Description

The overarching learning goal of this class is to create an appreciation for the tight interplay between mechanism, sensor, and control in the design of intelligent systems. This includes (1) formally describing the forward and inverse kinematics of a mechanism, (2) understanding the sources of uncertainty in sensing and actuation and describing them mathematically, (3) discretizing the robot’s state and reasoning about it algorithmically, and (4) experiencing 1-3 in physics-based simulation or on a real robotic platform.

In Spring 2022, this class is taught as a “special topics” class CSCI4830/7000 to broadly explore the topic “Robotic Manipulation”.

Meet the Team

- Spring 2022

- Instructor: Nikolaus Correll

Syllabus

Understand the specific challenges in robotic manipulation, ranging from mechanisms to sensing, perception, and control.

| Week | Content | Reading | Lab |

|---|---|---|---|

| 1 | Manipulation overview | Robots getting a grip on manipulation, IROS 2020 keynote | Introduction to Webots |

| 2 | Coordinate systems and frames of reference | Sections 2.3-4 | Inverse kinematics |

| 3 | Forward and inverse kinematics of manipulators | Sections 3.1-3 | Inverse kinematics |

| 4 | Forces | Chapter 4 | Inverse kinematics |

| 5 | Grasping | Chapter 5 | Grasping |

| 6 | Actuation for manipulation | Chapter 6, “Systems, devices, components, and methods for a compact robotic gripper with palm-mounted sensing, grasping, and computing devices and components.” U.S. Patent No. 11,148,295 | Grasping |

| 7 | Sensors for manipulation | Chapter 7 | Oriented grasping |

| 8 | Vision for manipulation | Chapter 8 | Collision avoidance |

| 9 | Features: segmentation and PCA | Chapter 9, Section B.5 | Object recognition |

| 10 | Task execution using Behavior Trees | Chapter 11 | Object recognition |

| 11 | Manipulation planning | Chapter 14 | Bin picking |

| 12 | Uncertainty | Chapter 16 | Bin picking |

| 13 | Project | Chapter E | Project |

| 14 | Project | no reading | Project |

| 15 | Project presentations | no reading | Project |

Labs are due Wednesday evening at 6pm, before the start of the next lab. There are no extensions. Collaboration on labs is not allowed. We will be working on labs together in class and you are welcome to discuss your approach with fellow students, but each submission needs to be your own. (Plagiarism is checked using automated tools.) The project, by contrast, is encouraged to be a team effort.

Lab 0: Introduction to Webots (Manipulation)

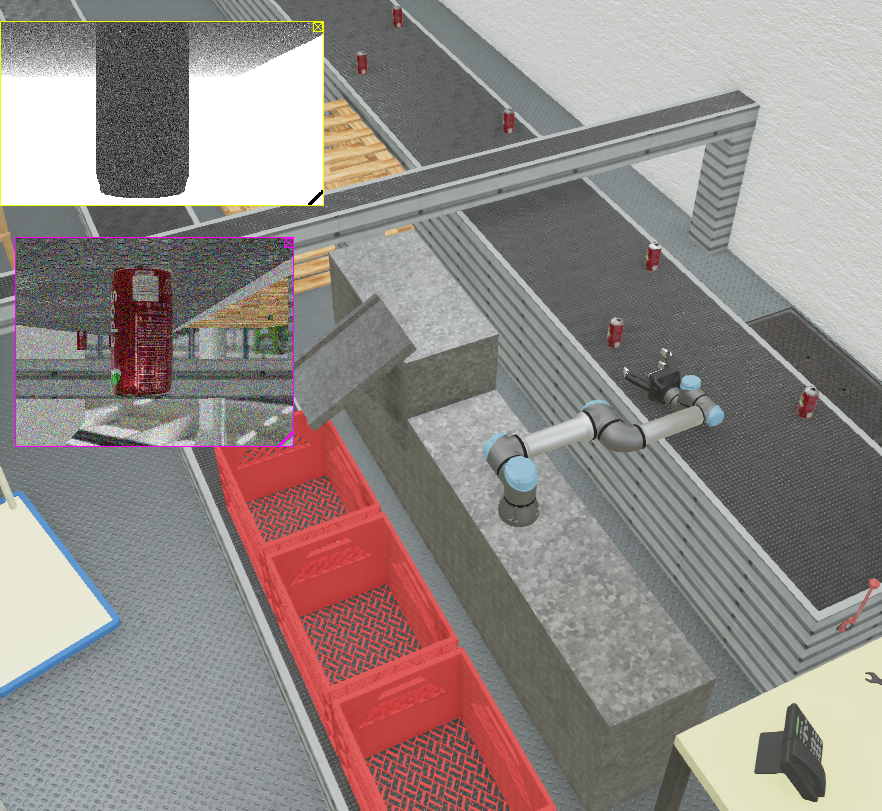

The goal of this lab is to understand the architecture and key data structures in Webots and how to access them from Python. You will augment a manipulating arm with a 3D camera and visualize its data stream in a Python notebook.

Lab 1: Inverse kinematics

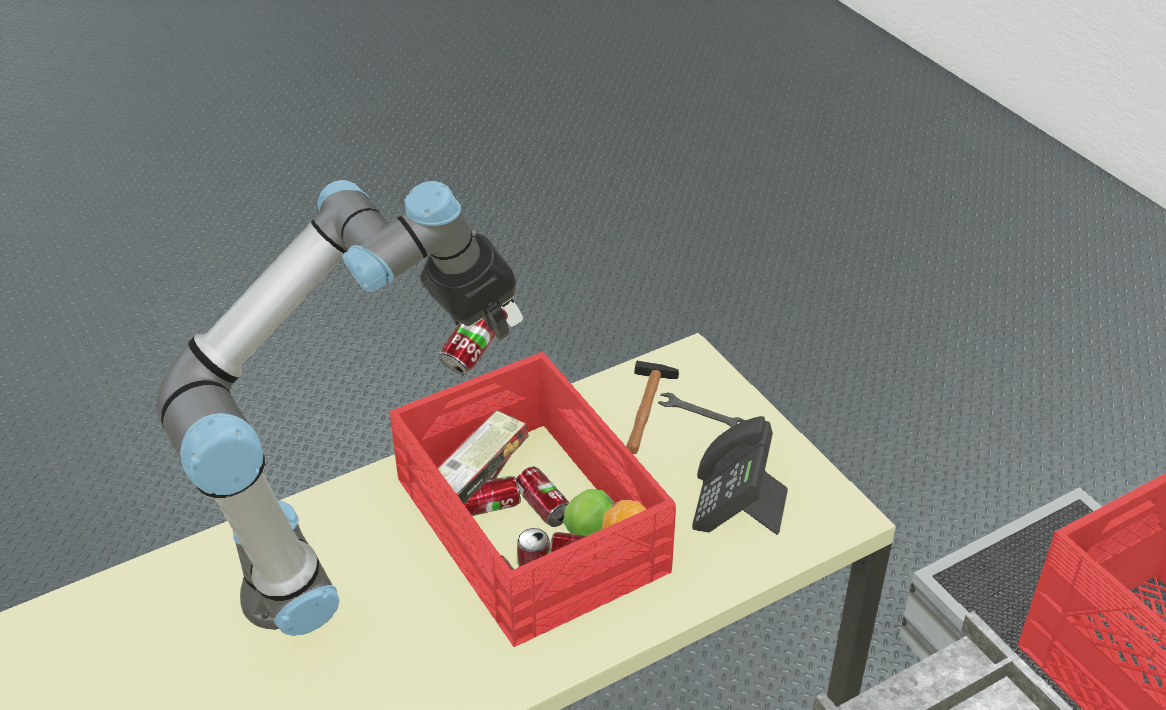

The goal of this lab is to implement basic inverse kinematics that allows a Universal Robots UR5 to move from point to point following the shortest path in Cartesian and joint space (akin to the “moveL” and “moveJ” commands that the real UR5 API provides). You will first implement a closed-form solution of the UR5’s inverse kinematics and then implement a basic trajectory following algorithm. These commands will be demonstrated by moving the robot above a red ball placed underneath it. This will require mounting a stereo camera at the robot’s end-effector and implementing basic image processing routines.
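
To illustrate the difference between the two motion types, here is a minimal sketch that uses a planar two-link arm as a stand-in for the UR5 (whose closed-form solution is considerably longer). The link lengths and the function names `forward`, `inverse`, `move_j`, and `move_l` are assumptions made for this example and are not part of the lab code or the UR5 API.

```python
import math

# Illustrative link lengths in meters (assumed values, not a UR5 model).
L1, L2 = 0.425, 0.392

def forward(q):
    """End-effector position (x, y) for joint angles q = (q1, q2)."""
    q1, q2 = q
    return (L1 * math.cos(q1) + L2 * math.cos(q1 + q2),
            L1 * math.sin(q1) + L2 * math.sin(q1 + q2))

def inverse(p, elbow_sign=1.0):
    """Closed-form IK; elbow_sign (+1 or -1) selects one of the two solutions."""
    x, y = p
    c2 = (x * x + y * y - L1 * L1 - L2 * L2) / (2.0 * L1 * L2)
    if abs(c2) > 1.0:
        raise ValueError("target is out of reach")
    s2 = elbow_sign * math.sqrt(1.0 - c2 * c2)
    q2 = math.atan2(s2, c2)
    q1 = math.atan2(y, x) - math.atan2(L2 * s2, L1 + L2 * c2)
    return (q1, q2)

def lerp(a, b, t):
    """Linear interpolation between two tuples for t in [0, 1]."""
    return tuple(ai + t * (bi - ai) for ai, bi in zip(a, b))

def move_j(q_start, q_goal, steps):
    """Waypoints on a straight line in joint space."""
    return [lerp(q_start, q_goal, i / steps) for i in range(steps + 1)]

def move_l(q_start, p_goal, steps):
    """Joint waypoints whose end-effector positions lie on a straight line."""
    p_start = forward(q_start)
    # Stay on the IK branch that the arm is currently in.
    sign = 1.0 if q_start[1] >= 0.0 else -1.0
    return [inverse(lerp(p_start, p_goal, i / steps), sign)
            for i in range(steps + 1)]
```

With `move_j` the end-effector generally follows a curve in Cartesian space, whereas `move_l` solves the inverse kinematics at every waypoint so that the tool tip stays on the line segment between start and goal.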

Lab 2: Simple Grasping

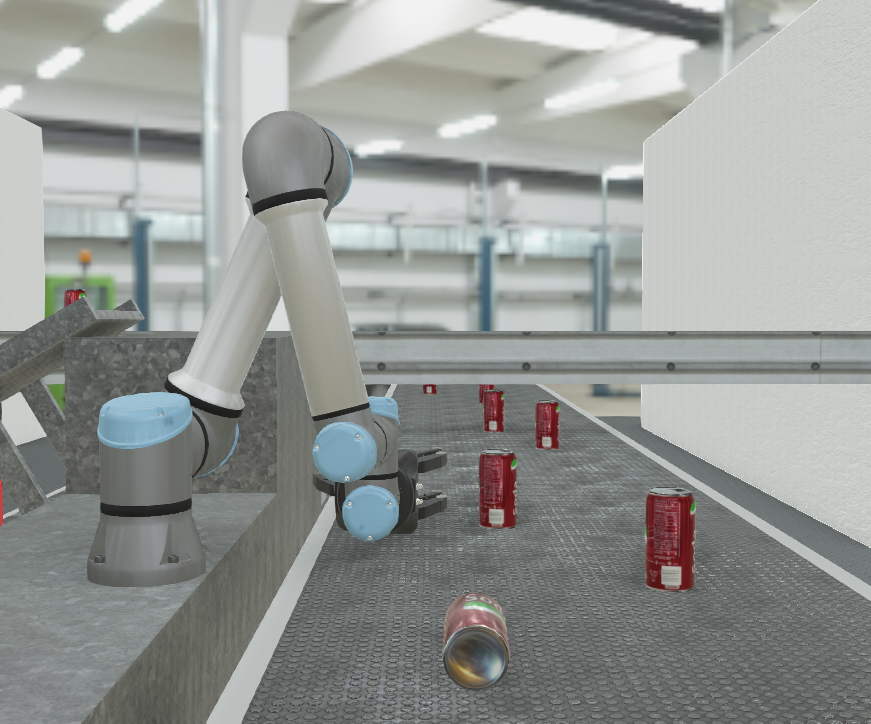

The goal of this lab is to implement basic point cloud processing algorithms to detect and localize the cans as they come on the conveyor belt. This lab requires a working implementation of inverse kinematics, trajectory controller, and state machine from labs 0 and 1.
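
One possible starting point for localization is sketched below, assuming a depth camera that looks straight down at the belt and a pinhole camera model. The intrinsics `fx`, `fy`, `cx`, `cy`, the `belt_depth` parameter, and both function names are hypothetical and chosen for this example only.

```python
def depth_to_points(depth, fx, fy, cx, cy):
    """Back-project a depth image (list of rows, meters) into camera-frame points."""
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            # Skip invalid readings (zero, negative, or infinite range).
            if 0.0 < z < float("inf"):
                points.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return points

def localize_object(points, belt_depth, margin=0.01):
    """Centroid of all points closer to the camera than the belt, or None."""
    obj = [p for p in points if p[2] < belt_depth - margin]
    if not obj:
        return None
    n = len(obj)
    return tuple(sum(p[i] for p in obj) / n for i in range(3))
```

The centroid is expressed in the camera frame and still has to be transformed into the robot's base frame before it can serve as an inverse kinematics target. Separating several cans that are visible at the same time requires an additional clustering step.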

Lab 3: Oriented Grasping

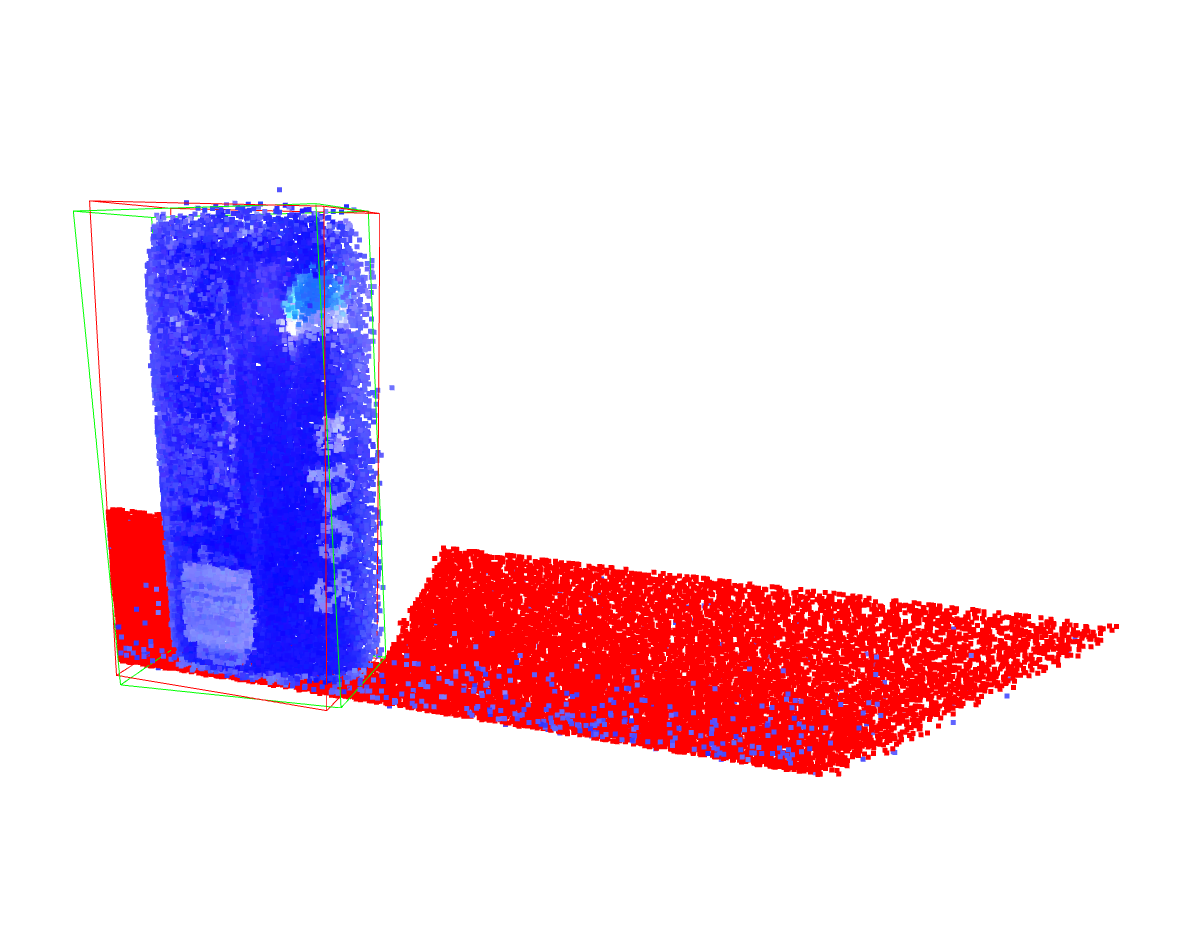

The goal of this lab is to translate 3D object poses into relevant grasp poses using principal component analysis to identify an object’s oriented bounding box. We will assume that perception is perfect.
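
The idea can be sketched in two dimensions, assuming the object rests on a flat surface so that only its yaw angle matters. For a 2x2 covariance matrix the principal axis has a closed form, so no linear algebra library is needed. The function name and return layout are assumptions made for this example.

```python
import math

def oriented_bounding_box(points):
    """Return (center, angle, (length, width)) of planar points via PCA."""
    n = len(points)
    mx = sum(p[0] for p in points) / n
    my = sum(p[1] for p in points) / n
    # Entries of the 2x2 covariance matrix.
    sxx = sum((p[0] - mx) ** 2 for p in points) / n
    syy = sum((p[1] - my) ** 2 for p in points) / n
    sxy = sum((p[0] - mx) * (p[1] - my) for p in points) / n
    # Angle of the first principal axis (direction of largest variance).
    angle = 0.5 * math.atan2(2.0 * sxy, sxx - syy)
    c, s = math.cos(angle), math.sin(angle)
    # Coordinates of every point in the principal-axis frame.
    a = [(p[0] - mx) * c + (p[1] - my) * s for p in points]
    b = [-(p[0] - mx) * s + (p[1] - my) * c for p in points]
    # Box center in the principal-axis frame, then mapped back to the world.
    ca, cb = (max(a) + min(a)) / 2.0, (max(b) + min(b)) / 2.0
    center = (mx + ca * c - cb * s, my + ca * s + cb * c)
    return center, angle, (max(a) - min(a), max(b) - min(b))
```

A parallel-jaw gripper would typically close across the shorter side of the box, so one plausible grasp pose places the gripper at `center` with its closing direction at `angle + math.pi / 2`.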

Lab 4: Collision avoidance

The goal of this lab is to implement a simple collision avoidance pipeline. The UR5 is positioned above a bin with known dimensions. You will first model the bin and the robot end-effector in a simple physics simulator (Bullet). Here, the bin dimensions are given and you simply need to detect the bin in the 3D camera data.
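
To make the underlying geometry concrete, here is a simplified sketch that replaces the physics simulator with a hand-written check. It approximates the end-effector by a sphere and the bin by five axis-aligned boxes (a floor and four walls). All names, the wall thickness, and the choice of a sphere are assumptions made for this example. The lab itself uses Bullet for collision queries.

```python
def sphere_box_collision(center, radius, box_min, box_max):
    """True if a sphere touches or overlaps an axis-aligned box."""
    d2 = 0.0
    for c, lo, hi in zip(center, box_min, box_max):
        nearest = min(max(c, lo), hi)  # closest box coordinate on this axis
        d2 += (c - nearest) ** 2
    return d2 <= radius ** 2

def make_bin(width, depth, height, wall=0.005):
    """Open bin centered at the origin with its inner floor at z = 0."""
    hw, hd = width / 2.0, depth / 2.0
    return [
        ((-hw, -hd, -wall), (hw, hd, 0.0)),           # floor
        ((-hw - wall, -hd, 0.0), (-hw, hd, height)),  # wall at -x
        ((hw, -hd, 0.0), (hw + wall, hd, height)),    # wall at +x
        ((-hw, -hd - wall, 0.0), (hw, -hd, height)),  # wall at -y
        ((-hw, hd, 0.0), (hw, hd + wall, height)),    # wall at +y
    ]

def in_collision(center, radius, boxes):
    """True if the sphere collides with any of the boxes."""
    return any(sphere_box_collision(center, radius, lo, hi) for lo, hi in boxes)
```

A candidate grasp pose can then be rejected when `in_collision` returns `True` for the end-effector position, for example `in_collision((0.19, 0.0, 0.1), 0.03, make_bin(0.4, 0.3, 0.2))`, which places the sphere 1 cm from a side wall.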

Lab 5: Object recognition

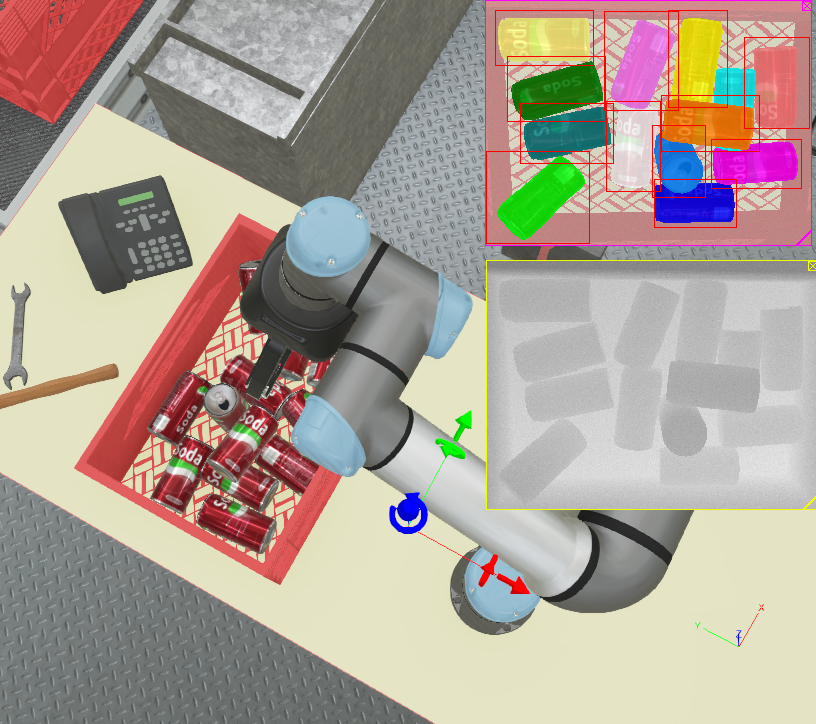

The goal of this lab is to identify individual objects in dense, cluttered environments such as a bin. Implement a simple segmentation algorithm (DBSCAN) to identify individual objects in the environment.
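
For reference, a minimal and unoptimized sketch of DBSCAN in plain Python follows. It uses a brute-force neighbor search, which is quadratic in the number of points and therefore only suitable for small or downsampled point clouds. The function signature is an assumption made for this example.

```python
def dbscan(points, eps, min_pts):
    """Return one label per point: a cluster id (0, 1, ...) or -1 for noise."""
    def neighbors(i):
        # All points within distance eps of point i (including i itself).
        return [j for j, q in enumerate(points)
                if sum((a - b) ** 2 for a, b in zip(points[i], q)) <= eps * eps]

    labels = [None] * len(points)  # None means "not visited yet"
    cluster = 0
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        seeds = neighbors(i)
        if len(seeds) < min_pts:
            labels[i] = -1  # provisionally noise; may become a border point
            continue
        labels[i] = cluster
        queue = list(seeds)
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster  # noise reached from a core point: border
            if labels[j] is not None:
                continue
            labels[j] = cluster
            nj = neighbors(j)
            if len(nj) >= min_pts:  # j is a core point, so keep expanding
                queue.extend(nj)
        cluster += 1
    return labels
```

Here `eps` is the neighborhood radius in the units of the point cloud and `min_pts` is the number of neighbors (counting the point itself) required for a core point. Both need to be tuned to the sensor resolution and object size.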

Lab 6: Bin picking

Use your object recognition, collision avoidance, and inverse kinematics code to implement a Behavior Tree for bin picking.
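
As a rough sketch of the control structure, the following defines the two most common composite nodes, Sequence and Fallback, and wires them into a toy bin-picking tree. The leaf functions `detect`, `grasp`, and `report_empty` are placeholders that operate on a dictionary (the "blackboard"). In the lab they would call your perception, planning, and motion code. All names are assumptions made for this example.

```python
SUCCESS, FAILURE, RUNNING = "SUCCESS", "FAILURE", "RUNNING"

class Action:
    """Leaf node that wraps a function taking the blackboard dictionary."""
    def __init__(self, name, fn):
        self.name, self.fn = name, fn
    def tick(self, bb):
        return self.fn(bb)

class Sequence:
    """Ticks children in order; stops at the first that does not succeed."""
    def __init__(self, *children):
        self.children = children
    def tick(self, bb):
        for child in self.children:
            status = child.tick(bb)
            if status != SUCCESS:
                return status
        return SUCCESS

class Fallback:
    """Ticks children in order; stops at the first that does not fail."""
    def __init__(self, *children):
        self.children = children
    def tick(self, bb):
        for child in self.children:
            status = child.tick(bb)
            if status != FAILURE:
                return status
        return FAILURE

def detect(bb):
    # Placeholder for object recognition: pick the first remaining object.
    if not bb["objects"]:
        return FAILURE
    bb["target"] = bb["objects"][0]
    return SUCCESS

def grasp(bb):
    # Placeholder for grasp planning and execution.
    bb["objects"].remove(bb["target"])
    bb["picked"].append(bb["target"])
    return SUCCESS

def report_empty(bb):
    bb["done"] = True
    return SUCCESS

def make_bin_picking_tree():
    pick_one = Sequence(Action("detect", detect), Action("grasp", grasp))
    return Fallback(pick_one, Action("report_empty", report_empty))
```

Ticking the tree repeatedly picks one object per tick until detection fails, at which point the Fallback node runs `report_empty`. These composites are memoryless, so a child that returns `RUNNING` is ticked again from the start of the tree on the next tick.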

Project

You have developed a complete solution for bin picking, from object segmentation and grasp generation to collision avoidance and dealing with uncertainty. Do you have an idea for how to improve one of these components? Did you come across an interesting method that you wish to test? Your project will require a clear hypothesis and an experimental design in Webots. The final deliverable is a paper (4-6 pages, IEEE double column format) that describes your experimental setup and your findings.

Grading

Lab (50%), Final Project (35%), Attendance/Participation (15%)

Textbook

“Introduction to Autonomous Robotics” by Nikolaus Correll, Bradley Hayes, Christoffer Heckman, and Alessandro Roncone [LINK]

Notes on Webots

- Webots is available as a binary for Windows, Linux, and Mac from www.cyberbotics.com. You will need to install a Python interpreter and set up Webots to use it.

- Follow the instructions in the Webots Reference Manual on external controllers in order to use Jupyter Lab, IPython, or other tools with better debugging capabilities.